The Llama 2 models follow a specific template when prompted in a chat style, including tags such as [INST] arranged in a particular structure (more details here). Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 13B fine-tuned... Customize Llama's personality by clicking the settings button. I can explain concepts, write poems and code, solve logic puzzles, or even name your pets. Send me a message or upload an... What's the prompt template best practice for prompting the Llama 2 chat models? Note that this only applies to the Llama 2 chat models; the base models have no prompt structure. In this post we're going to cover everything I've learned while exploring Llama 2, including how to format chat prompts, when to use which Llama variant, when to use ChatGPT...
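As a rough illustration of the chat template mentioned above, here is a minimal Python sketch that assembles a Llama 2 chat prompt from a system prompt and a list of turns. It assumes the commonly documented layout: `[INST]` / `[/INST]` around each user message, and `<<SYS>>` / `<</SYS>>` around a system prompt inside the first user message. The function name `build_llama2_chat_prompt` and its arguments are hypothetical. The `<s>` and `</s>` markers are written out as literal text here for readability; in practice a tokenizer usually adds them as special tokens, so treat that part as an assumption and check it against the tooling you use.

```python
# Sketch of the Llama 2 chat prompt layout (chat models only; base models
# have no prompt structure). Names below are illustrative, not an official API.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"
BOS, EOS = "<s>", "</s>"  # assumption: shown as text; tokenizers normally add these


def build_llama2_chat_prompt(turns, system_prompt=None):
    """Build a prompt string from (user, assistant) pairs.

    `turns` is a list of (user_message, assistant_reply) tuples. Use None as
    the assistant reply of the final turn to leave room for the model's answer.
    """
    if not turns:
        raise ValueError("at least one turn is required")

    parts = []
    for index, (user, assistant) in enumerate(turns):
        user = user.strip()
        # The system prompt is folded into the first user message.
        if index == 0 and system_prompt:
            user = f"{B_SYS}{system_prompt.strip()}{E_SYS}{user}"
        segment = f"{BOS}{B_INST} {user} {E_INST}"
        # Completed turns carry the assistant reply and an end-of-sequence marker.
        if assistant is not None:
            segment += f" {assistant.strip()} {EOS}"
        parts.append(segment)
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_llama2_chat_prompt(
        [("Name three uses for a paperclip.", None)],
        system_prompt="You are a concise assistant.",
    )
    print(prompt)
```

For a multi-turn conversation, pass earlier exchanges as completed pairs and leave the last assistant reply as `None`, so the prompt ends with `[/INST]` and the model continues from there.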

Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Below you can find and download Llama 2... Meta developed and publicly released the Llama 2 family of large language models (LLMs), a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70... This release includes model weights and starting code for pretrained and fine-tuned Llama language models (Llama Chat, Code Llama) ranging from 7B to 70B parameters. Description: This repo contains GGUF format model files for Meta's Llama 2 7B. About GGUF: GGUF is a new format introduced by the llama.cpp team on August 21st, 2023. With Microsoft Azure you can access Llama 2 in one of two ways: either by downloading the Llama 2 model and deploying it on a virtual machine, or by using Azure Model Catalog.

We will cover two scenarios here. In this notebook and tutorial, we will fine-tune Meta's Llama 2 7B. Watch the accompanying video walk-through (but for Mistral) here. If you'd like to see that notebook instead... [23/07/29] We release two instruction-tuned 13B models at Hugging Face. See these Hugging Face repos (LLaMA-2 / Baichuan) for details. [23/07/19] Now we support training the LLaMA-2 models. In this part we will learn about all the steps required to fine-tune the Llama 2 model with 7 billion parameters on a T4 GPU. This Jupyter notebook steps you through how to fine-tune a Llama 2 model on the text summarization task using the samsum...
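To see why fine-tuning a 7-billion-parameter model on a single T4 takes some care, a back-of-the-envelope estimate of weight memory helps. The sketch below is only arithmetic: it multiplies a nominal parameter count by the bytes needed per parameter at a few precisions. The helper name `weights_gb` is hypothetical, the parameter counts are the nominal sizes quoted above, and the 16 GB figure for a T4 is general hardware knowledge rather than something taken from the tutorials. It counts weights only; gradients, optimizer state, and activations need additional memory.

```python
# Rough estimate of the memory needed just to hold model weights.
# Illustrative only: real usage also includes activations, gradients,
# optimizer state, and framework overhead.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

NOMINAL_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

T4_MEMORY_GB = 16  # assumption: nominal memory of an NVIDIA T4


def weights_gb(n_params, precision):
    """Return approximate weight memory in GB (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n_params in NOMINAL_PARAMS.items():
        for precision in BYTES_PER_PARAM:
            size = weights_gb(n_params, precision)
            verdict = "fits" if size < T4_MEMORY_GB else "does not fit"
            print(f"{name} {precision}: {size:.1f} GB of weights ({verdict} in {T4_MEMORY_GB} GB)")
```

At 16-bit precision the 7B weights alone come to roughly 14 GB, which leaves very little of a 16 GB card for anything else.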

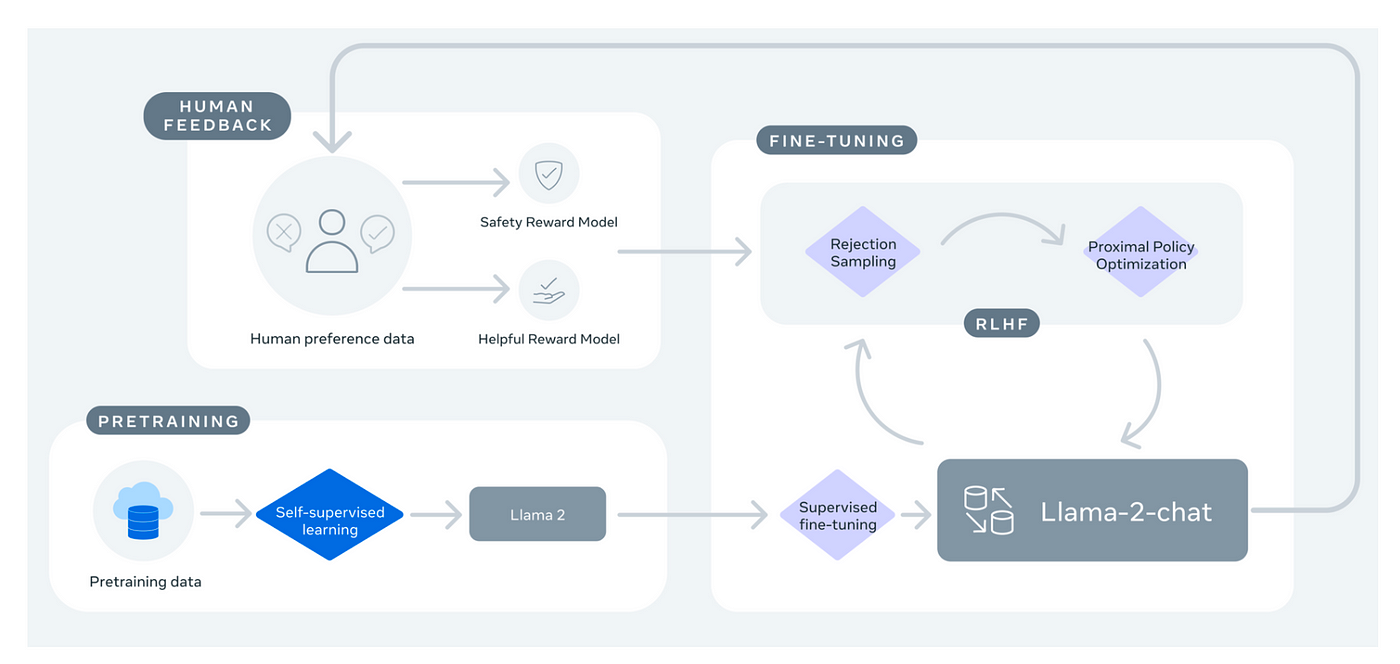

In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The LLaMA-2 paper describes the architecture in good detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it. Jose Nicholas Francisco. Published on 08/23/23, updated on 10/11/23. Llama 1 vs Meta's Genius Breakthrough in AI Architecture: Research Paper Breakdown. First... 6 min read, Oct 8, 2023. Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. In this work, we develop and release Llama 2, a family of pretrained and fine-tuned LLMs, Llama 2 and Llama 2-Chat, at scales up to 70B parameters. On the series of helpfulness and safety...
